How can the Government finally tackle online abuse?

We investigated solutions to help keep users safe online

The dark side of social media

Social media enables us to connect and communicate with other people. However, according to the TV personality and campaigner Bobby Norris, social media also has a “very dark side” – namely, the abuse that many people receive on these platforms. Bobby started a petition on the issue, and this prompted our inquiry into Tackling Online Abuse.

- Official research found that over a four-week period, 13% of users experienced trolling, 10% offensive or upsetting language, and 9% hate speech or speech encouraging violence.

Abuse affects people differently, but we heard it can impact the mental health of those who receive it and make them feel less willing or able to speak freely online.

Some groups of people are particularly at risk: disabled people, LGBT+ people, people from minority ethnic backgrounds and women are all more likely to be targeted for abuse. Children can also be especially vulnerable to harm from material they see online.

- Research has found that 70% of lesbian, gay and bisexual adults have encountered online harassment, compared to about 40% of straight adults.

- One study found 1 in 10 posts mentioning black women was abusive or problematic, compared to 1 in 15 posts mentioning white women.

A report by the Petitions Committee in 2019 highlighted the failure of social media companies to tackle online abuse, in particular abuse aimed at disabled people.

Since then, many companies have developed new technologies and tools to help keep people safe on their platforms. Yet we heard that progress in making online spaces less toxic has been limited.

We spoke to campaigners who told us companies were still choosing to prioritise user engagement over user safety.

How can the Government and social media platforms address online abuse?

1. The Online Safety Bill

We examined the Online Safety Bill, a proposed new law that the Government plans to introduce. The Government has said this new law will help to tackle online abuse.

The Bill will place legal duties on social media and other online platforms that allow UK users to post content such as comments, images or videos, or to talk to others via messaging or forums. These will include requiring them to take steps to protect their users’ safety.

Under the Bill, platforms will have to identify whether they are hosting any content which is illegal or risks harming people. If they are, they will have to take action, including removing illegal content. Platforms will also be required to protect users’ freedom of expression, and could face fines if they don’t meet their new responsibilities.

The measures expected to be included in the Bill will force social media platforms to take strong action against content that is illegal or might harm children. But we also want the Bill to set a clear standard of protection that adult users can expect online against abuse that is not illegal but is still potentially harmful to them.

We believe that the Bill should encourage social media companies to consider changing how their platforms work, and the functions and options available to their users, to reduce the risk of abuse being posted or shared in the first place. The Bill should also require them to prioritise tackling abuse aimed at those groups of users most likely to face abuse online.

2. What about changes to criminal law?

To help us consider when abusive behaviour online should be criminalised, we heard from representatives of the Law Commission. This is the body that keeps the law of England and Wales under review and recommends reform where it is needed.

They told us that people can already be prosecuted for sending abuse online. The current laws focus on whether a communication is ‘indecent’ or ‘grossly offensive’. They told us that these definitions are too vague and should be reformed.

The Law Commission has recommended new offences that would make online abuse illegal in some cases. These include:

- a harm-based offence, which would criminalise communications "likely to cause harm to a likely audience". The Commission defines harm as "psychological harm, amounting at least to serious distress";

- threatening communications offences, which would criminalise communications that convey "a threat of serious harm" such as grievous bodily harm or rape.

Some experts suggested to us that these proposed new offences would be more effective than existing laws. We heard they more clearly covered some forms of violence against women and girls committed online, and could allow some cases of online abuse to be punished more severely. We were also told they were more compatible with the right to free speech online.

The Government has indicated that it will consider introducing these new offences. We believe that if these new offences become law, the Government should review them within the first two years. This review should investigate how well the new offences hold people accountable for their behaviour online, and whether they are interfering too much with people’s freedom of speech.

But changes to criminal offences will have little impact if the police can’t enforce the law. Many of the experts we heard from suggested the police don’t have the resources they need to effectively investigate online abuse and trace the people responsible for it. We think the Government should scale up the police’s capacity to do this work.

3. Should we verify people’s online identities?

A big question we encountered was whether people should have to provide an identification document (ID) to use social media. Bobby Norris and Katie Price both suggested that people should have to provide some form of ID, like a passport or driving licence, to start an account.

They argued this would help stop people who have been banned from a platform from setting up a new account within minutes, which we heard is currently possible. It would also ensure the police could easily trace people posting potentially illegal abuse.

We received mixed responses to this idea. Secondary school students we spoke to saw that it could stop people from setting up fake or new accounts, but they raised concerns about data privacy and the risk of identity theft. We also heard that marginalised groups such as the LGBT+ community and undocumented migrants may be unable to provide ID, or less willing to do so for safety reasons.

Rather than making ID verification compulsory, some of the experts we spoke to suggested requiring social media platforms to give all users the option to verify their identity on a voluntary basis, and to block interactions with unverified accounts. We saw this as a helpful tool that people could use to give themselves extra protection from abuse.

What do young people think?

For this inquiry, we heard the views of secondary school students in specially organised sessions held in schools across the UK. Many young people have grown up using social media, and we wanted to hear students' ideas and insights on how the problem of online abuse might be addressed.

Students told us they thought the Government and social media companies need to do much more to tackle online abuse. Their ideas for how this could be done included:

“Social media companies should look into something that’s being reported and have three strikes and then have their accounts blocked.”

“A solution could be that social media companies warn you before you post something that AI thinks might be offensive.”

“People who aren’t your friends on social media shouldn’t be able to add you to group chats.”

“If someone has been found guilty of committing online abuse, social media companies should ban their whole device. This would prevent the person from using the same device to open another account.”

“Instead of automated removal of posts, have a filter system for offensive and rude comments/words. This needs to be a manual system to check to see context. Posts need to be able to be appealed.”

You can read their full responses in the annex to our report.

Our recommendations to the Government

Some of the key recommendations in our report are:

1. Social media companies should face fines if they cannot demonstrate that they are successfully preventing people who have been banned from the platform for abusive behaviour from setting up new accounts.

2. Social media users should have the option to link their account to a form of verified ID, on a voluntary basis, and block interactions with unverified users. This would help tackle abuse posted from anonymous or ‘throwaway’ accounts.

3. The Government’s Online Safety Bill should require social media companies to take steps to protect adult users from the risk of facing abuse on their platforms, which should include – but not be limited to – enforcing their own rules on acceptable content.

4. The Online Safety Bill should also require social media platforms to address abuse and hate speech aimed at people on the basis of characteristics including their race, sexuality, gender or disability as a priority. This is appropriate because of the greater levels of abuse aimed at these groups.

5. The Government should re-examine whether the police have the resources they need to effectively investigate and enforce laws on online abuse, including being able to trace users who post abuse anonymously.

You can read all of the recommendations we made in our full report.

What happens next?

The Government must now respond to our report.

Our report, Tackling Online Abuse, was published on 1 February 2022.

Detailed information from our inquiry can be found on our website.

If you’re interested in our work, you can find out more on the Petitions website. You can also follow our work on Twitter.

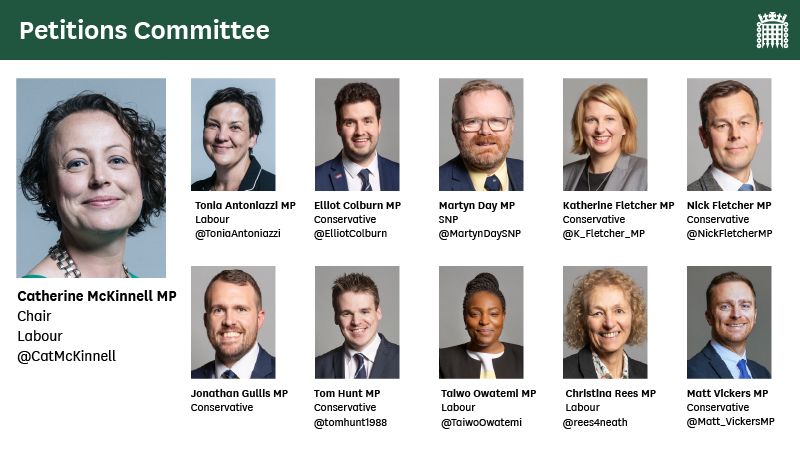

The Petitions Committee is set up by the House of Commons to look at e-petitions submitted on petition.parliament.uk and public (paper) petitions presented to the House of Commons.


Title image credit: Brett Jordan via Unsplash