Aspiration vs reality: the use of AI in autonomous weapon systems

AI and the future of warfare

Four proposals for proceeding with caution

Artificial intelligence (AI) has spread into many areas of life, and defence is no exception. AI has applications across the military sphere, from optimising logistics chains to processing large quantities of intelligence data. There is a growing sense that AI will have a major influence on the future of warfare, and forces around the world are investing heavily in capabilities enabled by AI. Despite these advances, fighting is still largely a human activity.

Bringing AI into the realm of warfare, through the use of AI-enabled weapons systems, could revolutionise defence technology and is one of the most controversial uses of AI today. There has been particular debate about how autonomous weapons can comply with the rules and regulations of armed conflict which exist for humanitarian purposes.

The Government asserts that AI specifically, and science and technology more broadly, is at the heart of its defence capability. It aims to be “ambitious, safe, responsible”. While we agree with this aim in principle, reality has not lived up to aspiration.

It is clear from media coverage of our inquiry that there is widespread interest in and concern about the use of AI in autonomous weapons. Achieving democratic endorsement will have several elements, including increasing public understanding of AI and autonomous weapons, enhancing the role of Parliament in decision making on autonomous weapons, and retaining public confidence in the development and use of autonomous weapons.

We therefore make the following proposals, among others in our report, to ensure the Government approaches development and use of AI in autonomous weapons in a way that is ethical and legal, providing key strategic and battlefield benefits, while achieving public understanding and democratic endorsement. "Ambitious, safe, responsible" must be translated into practical implementation.

1

Lead international engagement on autonomous weapons

The UK, like all states, is bound by obligations under international law with respect to the development and use of new weapons. The use of autonomous weapons in armed conflict is primarily governed by International Humanitarian Law.

The international community has been debating the regulation of autonomous weapons for several years. Outcomes from this debate could be a legally binding treaty or non-binding measures clarifying the application of international humanitarian law; each approach has its advocates. Despite differences on form, the key goal is to accelerate efforts to achieve an effective international instrument.

We call for swift agreement on an effective international instrument on lethal autonomous weapons. It is crucial to develop an international consensus on the criteria a system must meet to be compliant with international humanitarian law. Central to this is the retention of human moral agency. Non-compliant systems should be prohibited. Consistent with its ambitions to promote the safe and responsible development of AI around the world, the Government should be a leader in this effort.

2

Lead international engagement to prohibit the use of AI in nuclear command, control and communications

While advances in AI have the potential to bring greater effectiveness to nuclear command, control and communications, use of AI also has the potential to spur arms races or increase the likelihood of states escalating to nuclear use – either intentionally or accidentally – during a crisis. The compressed time for decision-making when using AI may lead to increased tensions, miscommunication, and misunderstanding. Moreover, an AI tool could be hacked, its training data compromised, or its outputs interpreted as fact when they are statistical correlations, all leading to potentially catastrophic outcomes.

The risks inherent in current AI systems, combined with their enhanced escalatory risk, are of particular concern in the context of nuclear command, control and communications. The Government should lead international efforts to achieve a prohibition on the use of AI in nuclear command, control and communications.

3

Adopt an operational definition of autonomous weapons

There is no single accepted definition of what constitutes an autonomous weapon, and clarifying the term has been a focus of international policymaking for many years. Many states and international organisations have adopted working definitions of what constitutes an autonomous weapon or an autonomous system.

Surprisingly, the UK does not have an operational definition of autonomous weapons. The Ministry of Defence has stated that it is cautious about adopting a definition because:

“such terms have acquired a meaning beyond their literal interpretation…[and] An overly narrow definition could become quickly outdated in such a complex and fast-moving area and could inadvertently hinder progress in international discussions”

However, we believe it is possible to create a future-proofed definition. Doing so would aid the UK’s ability to make meaningful policy on autonomous weapons and engage fully in discussions in international fora. Other states and organisations have adopted flexible, technology-agnostic definitions and we see no good reason why the UK cannot do the same.

In acknowledgement that autonomy exists on a spectrum and can be present in certain critical functions and not others, the Government should adopt operational definitions of 'fully' and 'partially' autonomous weapon systems.

4

Ensure meaningful human control at all stages

Much of the concern about autonomous weapons is focused on systems in which the autonomy is enabled by AI technologies, with an AI system analysing information obtained from sensors. However, it is essential to have human control over the deployment of the system to ensure both human moral agency and legal compliance.

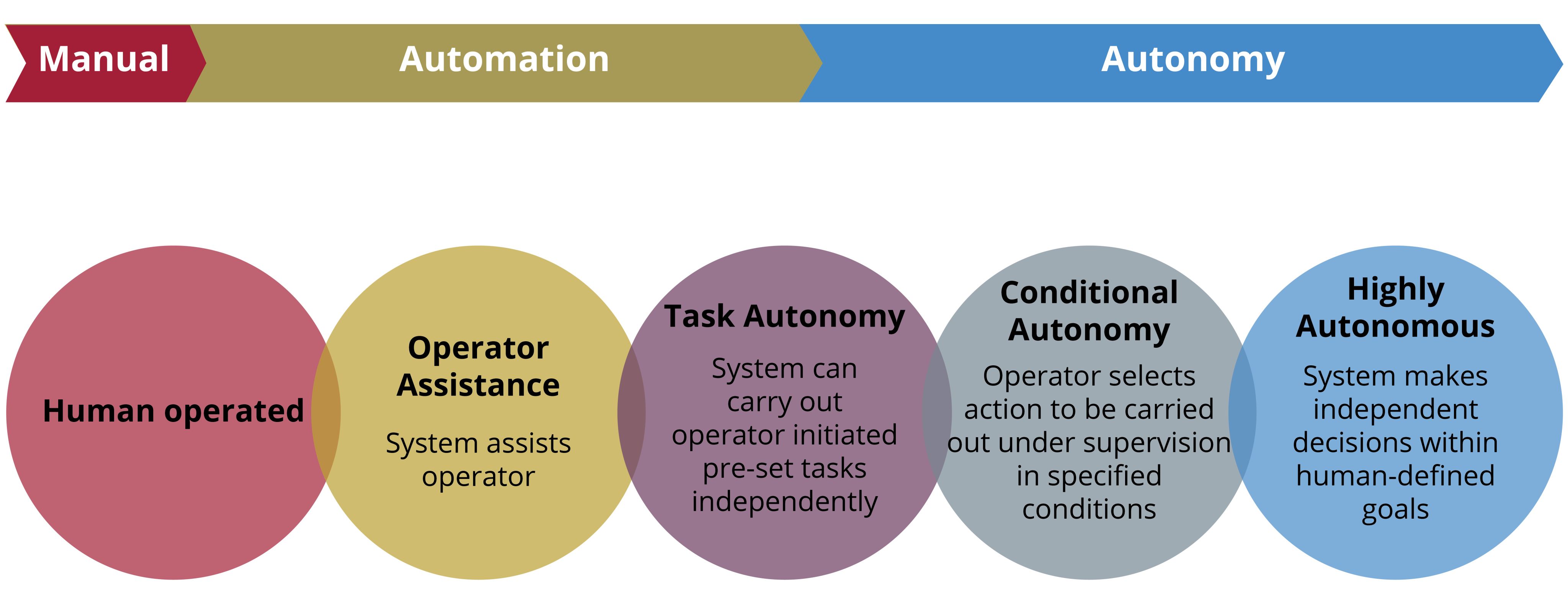

Autonomy exists on a spectrum (see figure below). The required level of human involvement, whether in oversight, verification, or control of actions made by the system, will vary depending upon:

- the design of the system,

- the mission objectives and

- the operational context of where and how the AI system is being used.

Autonomy spectrum framework

Source: Ministry of Defence, Defence Artificial Intelligence Strategy (June 2022), p4

The Ministry of Defence specifies that AI weapon systems which identify, select and attack targets must have “context-appropriate human involvement”.

Determining whether there is a sufficient degree of human control and setting a minimum level of human involvement in a system involves considering many nuanced factors such as the complexity and transparency of the system, the training of the operators, and physical factors such as when, where and for how long a system is deployed. Nonetheless, it is important that the Ministry of Defence undertakes this task from the design of a system to its deployment.

We note the Ministry of Defence's definition of "context-appropriate" and "human involvement". The Government must ensure that human control is consistently embedded at all stages of a system's lifecycle, from design to deployment. This is particularly important for the selection and attacking of targets.

What happens next?

We have made our recommendations to the Government and it now has two months to respond to our report.

Read the full report on our website

Our committee is a special inquiry committee for 2023.

Find out more about our inquiry and our committee.

Follow the committee @HLAIWeapons

Cover image: UK MOD © Crown copyright 2021